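Low broadcast latency has become a mandatory requirement in tenders and competitions for building headends and CDNs. Previously, such criteria applied only to sports broadcasting, but operators now require broadcast equipment vendors to deliver low latency in every area: news, concerts, performances, interviews, talk shows, debates, esports and gambling.

Generally speaking, latency is the time difference between the moment a particular video frame is captured by a device (camera, playout, encoder, etc.) and the moment that frame is played on the end user's display.

Low latency should not reduce the quality of signal transmission, which means that minimal buffering is needed during encoding and multiplexing while keeping a smooth, clear picture on the screen of any device. Another prerequisite is guaranteed delivery: all lost packets should be recovered, and transmission over open networks should not cause any problems.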

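More and more services are migrating to the cloud to save on rented premises, electricity and hardware costs. This makes low latency harder to achieve, because it has to be delivered over links with a high RTT (round-trip time). This is especially true when transmitting high bitrates for HD and UHD video, for example when the cloud server is located in the USA and the content consumer is in Europe.

In this review, we analyze current market offerings for low-latency broadcasting.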

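UDP

Probably the first technology that became widely used in modern TV broadcasting and associated with the term "low latency" was multicast broadcasting of MPEG Transport Stream content over UDP. This format is typically chosen for closed, unloaded networks where the probability of packet loss is minimized. Examples are broadcasting from an encoder to a modulator at the headend (often within the same server rack), or IPTV broadcasting over a dedicated copper or fiber-optic line with amplifiers and repeaters. This technology is used universally and demonstrates excellent latency. In our market, domestic companies have achieved an encoding, data transfer and decoding latency of no more than 80 ms at 25 frames per second over Ethernet. At higher frame rates, this figure is even smaller.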

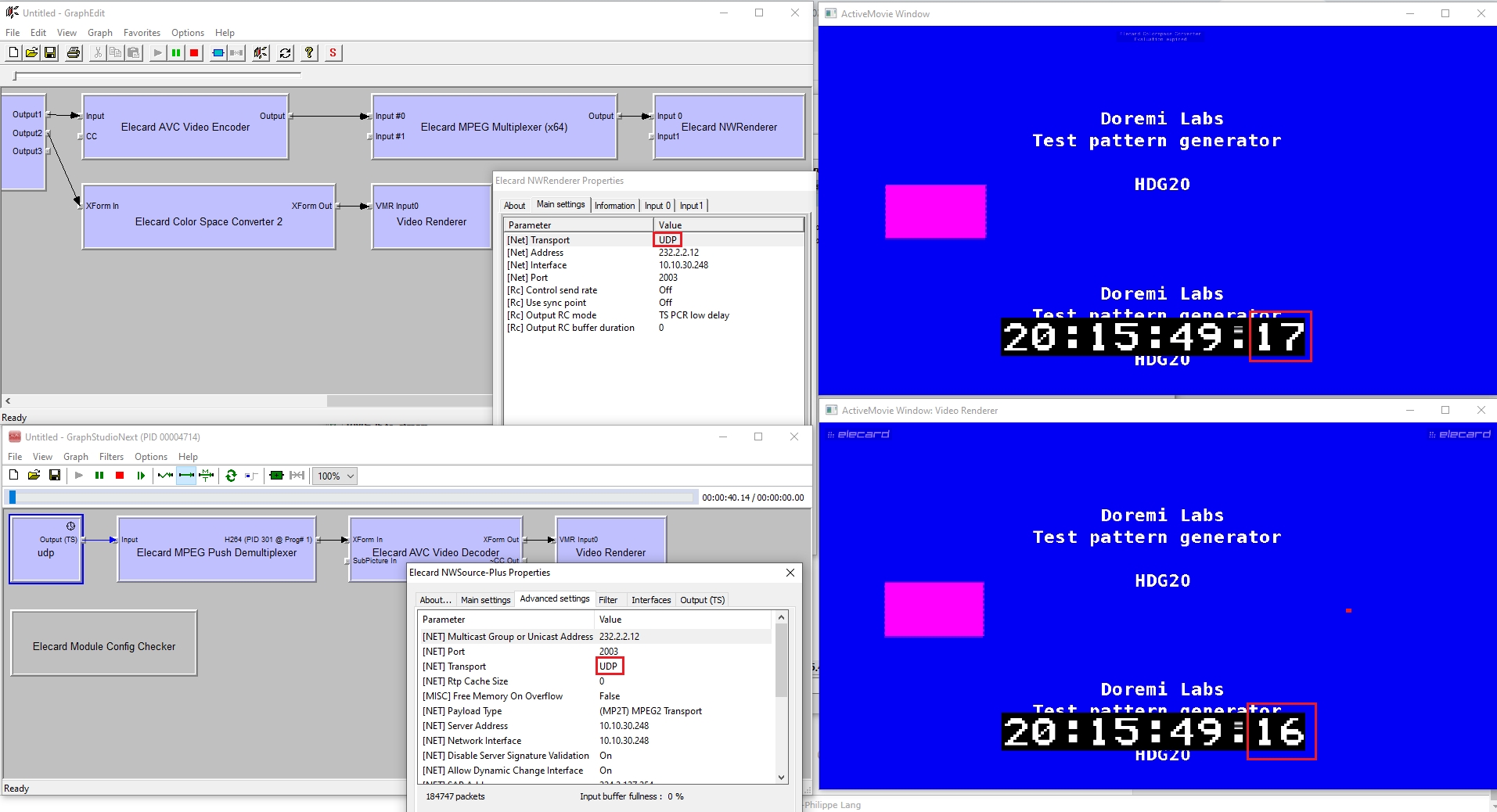

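Figure 1. UDP broadcast latency measurement in the laboratory

The first picture shows the signal from an SDI capture card. The second picture shows the signal that has passed through the encoding, multiplexing, broadcasting, receiving and decoding stages. As you can see, the second signal arrives one unit later (in this case 1 frame, which is 40 ms, because there are 25 frames per second). A similar solution was used at the 2017 Confederations Cup and the 2018 FIFA World Cup, with only a modulator, a distributed DVB-C network and a TV as the end device added to the overall chain. The total latency was 220–240 ms.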
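The frame-to-millisecond conversion used in this measurement can be sketched in a few lines of Python. The helper name `frames_to_ms` is ours and purely illustrative; it only restates the arithmetic above.

```python
def frames_to_ms(frames: int, fps: float) -> float:
    """Convert a delay measured in whole frames to milliseconds."""
    return frames * 1000.0 / fps


print(frames_to_ms(1, 25))  # 40.0 -> one frame at 25 fps
print(frames_to_ms(1, 50))  # 20.0 -> the same one-frame delay at 50 fps
```

This also shows why the figure shrinks at higher frame rates: a delay of the same number of frames takes less wall-clock time.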

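What if the signal passes through an external network? There are various problems to overcome: interference, shaping, traffic congestion, hardware errors, damaged cables and software-level issues. In this case, not only low latency is required but also recovery of lost packets. For UDP, Forward Error Correction (FEC) with redundancy (additional check traffic, or overhead) does a good job. At the same time, the required network throughput inevitably grows, and so do latency and the redundancy level, depending on the expected percentage of lost packets. The percentage of packets that FEC can recover is always limited, while the loss rate may vary greatly during transmission over open networks. Therefore, to transfer large amounts of data reliably over long distances, a large amount of excess traffic has to be added.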
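To make the trade-off between overhead and recoverable loss concrete, here is a minimal sketch of the simplest possible FEC: one XOR parity packet per group of equal-length packets. It is an illustration under our own assumptions (function names, group size), not the scheme of any particular product; real deployments use more elaborate arrangements.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """Build one parity packet as the XOR of every packet in the group."""
    return reduce(xor_bytes, packets)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Restore at most one missing packet (marked as None) using the parity."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("single-parity FEC can repair only one loss per group")
    repaired = list(received)
    if missing:
        present = [p for p in received if p is not None]
        # XOR of the parity with all surviving packets yields the lost one.
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


# Five 188-byte packets (the size of an MPEG-TS packet) plus one parity packet.
group = [bytes([i]) * 188 for i in range(1, 6)]
parity = make_parity(group)
damaged = [group[0], None, group[2], group[3], group[4]]
restored = recover(damaged, parity)
overhead = 1 / len(group)  # 0.2, i.e. 20% extra traffic for this group size
```

With this toy scheme, 20% extra traffic repairs one loss per five packets and nothing more, and the receiver has to wait for the whole group before it can repair anything. Tolerating burstier loss means more parity, hence more overhead and more delay.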

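TCP

Let's consider technologies based on the TCP protocol (reliable delivery). If the checksum of a received packet does not match the expected value (set in the TCP packet header), the packet is resent. If the client and server do not support the Selective Acknowledgment (SACK) specification, the whole chain of TCP packets is resent, from the lost packet to the last packet received, and at a lower rate.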

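Previously, TCP was avoided for low-latency live broadcasting, because error checking, packet retransmission, the three-way handshake, slow start and congestion avoidance (the TCP Slow Start and Congestion Avoidance phases) all increase latency. Even on a wide channel, the delay before transmission starts may reach five times the round-trip time (RTT), and increasing throughput has very little effect on latency.

In addition, applications that broadcast over TCP have no control over the protocol itself (its timeouts or retransmission window sizes), because TCP transmission is implemented as a single continuous stream, and an application may freeze for an indefinite period before an error is reported. Higher-level protocols have no means of configuring TCP to minimize broadcasting problems.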

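At the same time, there are protocols that work efficiently over UDP even in open networks and over long distances.

Let's consider and compare various protocol implementations. Among TCP-based protocols and data transfer formats we will look at RTMP, HLS and CMAF, and among UDP-based ones, WebRTC and SRT.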

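RTMP

RTMP is a proprietary Macromedia protocol (now owned by Adobe) that was very popular when Flash-based applications were widespread. It has several variants, including ones with TLS/SSL encryption and even a UDP-based variant, RTMFP (Real-Time Media Flow Protocol, used for peer-to-peer connections). RTMP splits the stream into fragments whose size can change dynamically. Inside a channel, packets related to audio and video may be interleaved and multiplexed.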

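Figure 2. Example of an RTMP broadcast implementation

RTMP forms several virtual channels over which audio, video, metadata and so on are transmitted. Most CDNs no longer support RTMP as a protocol for distributing traffic to end clients. However, an RTMP module is available for Nginx that supports plain RTMP, which runs on top of TCP and uses port 1935 by default. Nginx can act as an RTMP server and distribute the content it receives from RTMP streamers. RTMP also remains a popular protocol for delivering traffic to CDNs, although in the future that traffic will be streamed using other protocols.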

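Today, Flash technology is outdated and practically unsupported: browsers have either reduced their support or block it completely. RTMP is not supported in HTML5 and does not work in browsers natively (playback relies on the Adobe Flash plugin). To pass through firewalls, RTMPT is used (RTMP encapsulated into HTTP requests, using the standard ports 80/443 instead of 1935), but this significantly affects latency and redundancy (by various estimates, RTT and overall latency increase by 30%). Even so, RTMP is still popular, for example for broadcasting to YouTube or social media (RTMPS for Facebook).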

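The key disadvantages of RTMP are the lack of HEVC/VP9/AV1 support and a limit of only two audio tracks. Also, RTMP does not carry timestamps in its packet headers. It contains only labels calculated from the frame rate, so the decoder does not know exactly when to decode the stream. This requires the receiving component to generate samples for decoding at an even pace, so the buffer has to be enlarged according to the size of the packet jitter.

Another RTMP problem is the resending of lost TCP packets described above. Acknowledgments (ACKs) are not sent to the sender for every packet, in order to keep return traffic low. Only after a chain of packets has been received is a positive (ACK) or negative (NACK) acknowledgment sent to the broadcasting side.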

By various estimates, the latency of RTMP broadcasting is at least two seconds over the full path (RTMP encoder → RTMP server → RTMP client).

CMAF

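Common Media Application Format (CMAF) is a format developed by MPEG (Moving Picture Experts Group) at the request of Apple and Microsoft for adaptive broadcasting over HTTP (with an adaptive bitrate that follows changes in the available network bandwidth). Normally, Apple's HTTP Live Streaming (HLS) uses MPEG Transport Streams, while MPEG DASH uses fragmented MP4. The CMAF specification was released in July 2017. In CMAF, fragmented MP4 segments (ISOBMFF) are transmitted over HTTP, with two different playlists for the same content aimed at a specific player: iOS (HLS) or Android/Microsoft (MPEG DASH).

By default, CMAF (like HLS and MPEG DASH) is not designed for low-latency broadcasting. But attention to and interest in low latency keep growing, so some manufacturers offer an extension of the standard, such as Low Latency CMAF. This extension assumes that both the broadcasting and the receiving side support two methods: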

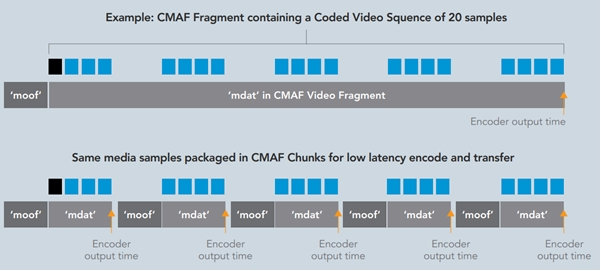

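- Chunk encoding: dividing segments into sub-segments (small fragments with moof + mdat MP4 boxes, which together make up a whole segment suitable for playback) and sending them before the whole segment is assembled;

- Chunked transfer encoding: using HTTP/1.1 to send sub-segments to the CDN (origin). Only one HTTP POST request is sent for the whole 4-second segment (at 25 frames per second), after which 100 small fragments (one frame each) may be sent within the same session. The player may also try to download an incomplete segment; the CDN, in turn, serves the finished part using chunked transfer encoding and then holds the connection open until new fragments are added to the segment being downloaded. The transfer of the segment to the player completes once the whole segment has been formed on the CDN side.

Figure 3. Standard and fragmented CMAF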
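The two methods above can be illustrated with a short Python sketch. It frames payloads the way HTTP/1.1 chunked transfer encoding does (hexadecimal length, CRLF, data, CRLF, then a zero-length chunk at the end) and reproduces the chunk count from the example. The helper names and the placeholder payloads are our assumptions, not part of any CMAF toolchain.

```python
def encode_chunk(payload: bytes) -> bytes:
    """Frame one payload as an HTTP/1.1 chunk: hex length, CRLF, data, CRLF."""
    return f"{len(payload):X}".encode("ascii") + b"\r\n" + payload + b"\r\n"


# A zero-length chunk tells the receiver that the body (the segment) is complete.
LAST_CHUNK = b"0\r\n\r\n"


def chunks_per_segment(segment_seconds: float, fps: float,
                       frames_per_chunk: int = 1) -> int:
    """Number of sub-segments that make up one full segment."""
    return round(segment_seconds * fps) // frames_per_chunk


# Placeholder payloads standing in for real moof + mdat fragments.
fragments = [b"moof+mdat #1", b"moof+mdat #2"]
body = b"".join(encode_chunk(f) for f in fragments) + LAST_CHUNK

print(chunks_per_segment(4, 25))  # 100 one-frame chunks in a 4-second segment
```

Because each chunk is self-delimiting, the sender can push it the moment a frame is encoded, and the receiver can start working on it without waiting for the final zero-length chunk.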

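Switching between profiles requires buffering (at least 2 seconds). Given this, as well as potential delivery problems, the developers of the standard claim a potential latency of less than three seconds. At the same time, killer features are retained, such as scaling through CDNs with thousands of simultaneous clients, encryption (including Common Encryption support), HEVC and WebVTT (subtitles) support, guaranteed delivery and compatibility with different players (Apple/Microsoft). On the downside, the player must support LL CMAF (fragmented segments and advanced handling of internal buffers). In case of incompatibility, however, the player can still play the content within the CMAF specification, with the standard latency typical of HLS or DASH.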

Low Latency HLS

In June 2019, Apple published the specification for Low Latency HLS.

It consists of the following components:

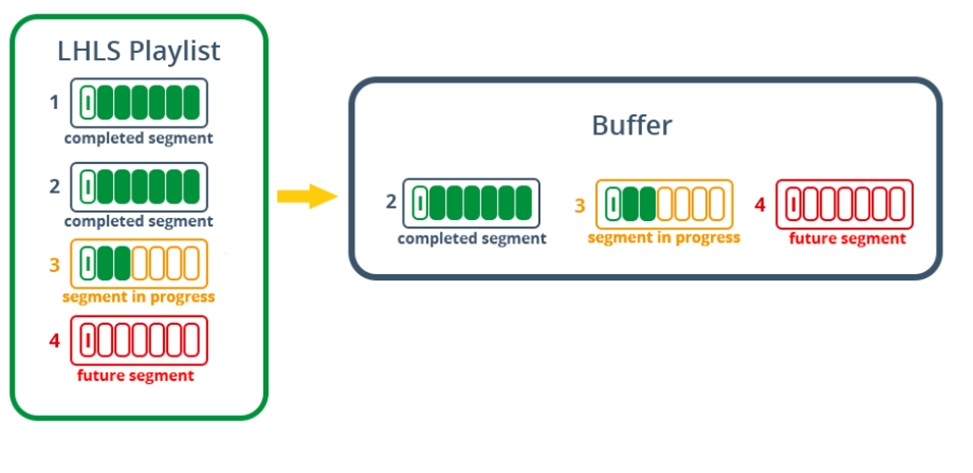

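- Generation of partial segments (fragmented MP4 or TS) with a duration as short as 200 ms, which become available even before the whole segment (chunk) made up of such parts (EXT-X-PART) is complete. Outdated partial segments are regularly removed from the playlist.

- The server side may use HTTP/2 push mode to send an updated playlist together with a new segment (or fragment). However, this recommendation was dropped in the latest revision of the specification in January 2020.

- The server is responsible for holding a request (blocking) until a version of the playlist containing the new segment is available. Blocking playlist reload eliminates polling.

- Sending the difference (delta) instead of the full playlist: the client keeps the playlist it already has, and the server sends only the incremental update (EXT-X-SKIP) when one appears, rather than the full playlist.

- The server announces upcoming partial segments (preload hint).

- Information about the playlists of adjacent profiles is loaded in parallel (rendition reports) for faster switching.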

Figure 4. LL HLS operating principle
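As a rough illustration of blocking playlist reload and delta updates, the sketch below builds the URL a player might request next. The query parameters `_HLS_msn`, `_HLS_part` and `_HLS_skip` are the delivery directives defined by the HLS specification; the function name, the example URL and the sequence numbers are our own assumptions.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def blocking_reload_url(playlist_url: str, next_msn: int,
                        next_part: Optional[int] = None,
                        skip: bool = False) -> str:
    """Build a playlist request that the server holds until the given
    media sequence number (and, optionally, part) is available."""
    parts = urlsplit(playlist_url)
    query = dict(parse_qsl(parts.query))
    query["_HLS_msn"] = str(next_msn)
    if next_part is not None:
        query["_HLS_part"] = str(next_part)
    if skip:
        # Ask for a delta update instead of the full playlist.
        query["_HLS_skip"] = "YES"
    return urlunsplit(parts._replace(query=urlencode(query)))


url = blocking_reload_url("https://example.com/live/video.m3u8",
                          next_msn=273, next_part=2, skip=True)
print(url)
# https://example.com/live/video.m3u8?_HLS_msn=273&_HLS_part=2&_HLS_skip=YES
```

The player issues this request immediately after parsing the current playlist, and the server answers only once part 2 of segment 273 exists. That is how polling is avoided.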

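With full support of this specification by both the CDN and the player, the expected latency is less than three seconds. HLS is very widely used for broadcasting over open networks thanks to its excellent scalability, encryption and adaptive bitrate support, cross-platform support and backward compatibility, which is useful if the player does not support LL HLS.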

WebRTC

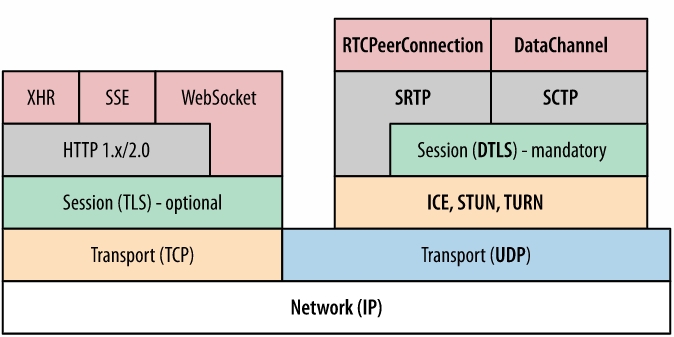

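Web Real-Time Communication (WebRTC) is an open-source technology developed by Google in 2011. It is used in Google Remote Desktop, Google Duo, Google Meet, Zoom, Hangouts, YouTube Live (streaming from the browser) and Stadia cloud gaming. WebRTC is a set of standards, protocols and JavaScript programming interfaces that implements end-to-end encryption through DTLS-SRTP within a peer-to-peer connection. Moreover, the technology does not use third-party plugins or software, and passes through firewalls without loss of quality or added latency (for example, during video conferences in browsers). For video broadcasting, a UDP-based WebRTC implementation is typically used.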

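The protocol works as follows: a host sends a connection request to the peer it wants to connect to. Until the connection between the peers is established, they communicate with each other through a third party, the signaling server. Each peer then approaches a STUN server with the question "Who am I?" (that is, how can I be reached from outside?). Public Google STUN servers are available for this (for example, stun.l.google.com:19302). The STUN server provides a list of IP addresses and ports through which the current host can be reached. ICE candidates are formed from this list. The second side does the same. The ICE candidates are exchanged through the signaling server, and it is at this stage that the peer-to-peer connection is established, i.e. a peer-to-peer network is formed.

If a direct connection cannot be established, a so-called TURN server acts as a relay/proxy server, which is also included in the list of ICE candidates.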
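The "Who am I?" question is a STUN Binding Request. As a minimal sketch, the Python code below builds the 20-byte request header by hand: message type, body length, the fixed magic cookie and a random 96-bit transaction ID. The function name is our own, and the sketch deliberately stops before sending anything over the network.

```python
import os
import struct
from typing import Optional

STUN_BINDING_REQUEST = 0x0001   # message type: Binding Request
STUN_MAGIC_COOKIE = 0x2112A442  # fixed value defined by the STUN specification


def build_binding_request(transaction_id: Optional[bytes] = None) -> bytes:
    """Return a STUN Binding Request with an empty body (header only)."""
    if transaction_id is None:
        transaction_id = os.urandom(12)  # 96-bit random transaction ID
    if len(transaction_id) != 12:
        raise ValueError("transaction ID must be exactly 12 bytes")
    # Network byte order: 16-bit type, 16-bit body length, 32-bit magic cookie.
    header = struct.pack("!HHI", STUN_BINDING_REQUEST, 0, STUN_MAGIC_COOKIE)
    return header + transaction_id


packet = build_binding_request()
print(len(packet))  # 20
```

Sent as a UDP datagram to a STUN server, such a request is answered with a response whose XOR-MAPPED-ADDRESS attribute carries the public IP address and port the server saw. That pair is what becomes a server-reflexive ICE candidate.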

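The SCTP (application data) and SRTP (audio and video data) protocols are responsible for multiplexing, sending, congestion control and reliable delivery. DTLS is used for the handshake exchange and further traffic encryption.

Figure 5. WebRTC protocol stack

The VP8, VP9, H.265 and AV1 video codecs and the Opus, G.711, iLBC and iSAC audio codecs are used. The maximum supported resolution is 4K at 60 frames per second.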

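In terms of security, a drawback of WebRTC is that the real IP address can be determined even behind NAT and when using the Tor network or a proxy server. Because of its connection architecture, WebRTC is not intended for a large number of simultaneously viewing peers (it is hard to scale), and it is currently rarely supported by CDNs. WebRTC-based solutions from different vendors are almost always incompatible with each other, because they use their own implementations. Finally, WebRTC is inferior to its counterparts in encoding quality and the maximum amount of data transmitted.

Google claims a latency of less than one second. The protocol can be used not only for video conferencing but also, for example, for file transfer.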

SRT

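Secure Reliable Transport (SRT) is a protocol developed by Haivision in 2012. It is based on UDT (UDP-based Data Transfer Protocol) and ARQ packet recovery. It supports AES-128 and AES-256 encryption. In addition to listener (server) mode, it supports caller (client) and rendezvous (both sides initiate the connection) modes, which allows connections to be established through firewalls and NAT. The handshake in SRT is performed within existing security policies, so external connections are allowed without opening permanent external ports in the firewall.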

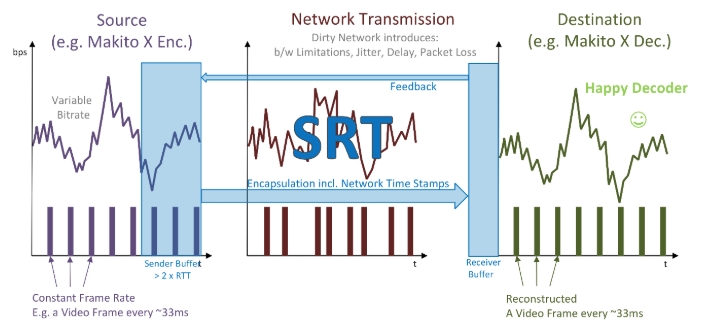

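SRT includes a timestamp inside each packet, which allows playback at a rate equal to the stream encoding rate without large buffering, while compensating for jitter (a constantly changing packet arrival rate) and the incoming bitrate. Unlike TCP, where the loss of one packet may cause the whole chain of packets starting from the lost one to be resent, SRT identifies a particular packet by its number and resends only that packet. This has a positive effect on latency and redundancy. Packets are resent with a higher priority than regular broadcast traffic. Unlike standard UDT, SRT has a completely redesigned retransmission architecture that responds as soon as a packet is lost. The technique is a variation of selective repeat/reject ARQ. Note that a particular lost packet can be resent only a fixed number of times. The sender skips a packet once the time on the packet exceeds 125% of the overall latency. SRT also supports FEC, and users decide for themselves which of the two techniques to use (or both) to balance the lowest latency against the highest delivery reliability.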

Figure 6. SRT operating principle in open networks
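The difference between resending a whole chain and resending only the lost packets, together with the 125% skip rule, can be sketched as a toy model. This is not the SRT library API: the function names, sequence numbers and timings are assumptions made for illustration only.

```python
from typing import List


def go_back_n_resend(lost: List[int], last_sent: int) -> List[int]:
    """Without selective acknowledgment: resend everything from the first loss."""
    return list(range(min(lost), last_sent + 1))


def selective_resend(lost: List[int]) -> List[int]:
    """Selective repeat: resend only the packets reported as missing."""
    return sorted(set(lost))


def still_worth_resending(packet_age_ms: float, latency_ms: float,
                          drop_factor: float = 1.25) -> bool:
    """A packet is skipped once its age exceeds 125% of the overall latency."""
    return packet_age_ms <= latency_ms * drop_factor


lost = [103, 107]
print(go_back_n_resend(lost, last_sent=110))        # packets 103..110, eight in total
print(selective_resend(lost))                       # [103, 107]
print(still_worth_resending(140, latency_ms=120))   # True, the limit is 150 ms
print(still_worth_resending(160, latency_ms=120))   # False, too late to be useful
```

In this toy case the selective approach resends two packets instead of eight. The age check keeps the sender from wasting bandwidth on data the receiver could no longer play in time.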

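Data transmission in SRT can be bidirectional: both endpoints can send data at the same time, and each can act either as the listener or as the side initiating the connection (the caller). Rendezvous mode can be used when both sides need to establish the connection. The protocol has an internal multiplexing mechanism that allows several streams of one session to be multiplexed into a single connection using one UDP port. SRT is also suitable for fast file transfer, a capability first introduced in UDT.

SRT has a network congestion control mechanism. Every 10 ms, the sender receives the latest data on RTT (round-trip time) and its changes, the available buffer size, the packet receiving rate and the approximate capacity of the current link. There is a limit on the minimum interval between two packets sent in succession. Packets that cannot be delivered in time are removed from the queue.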

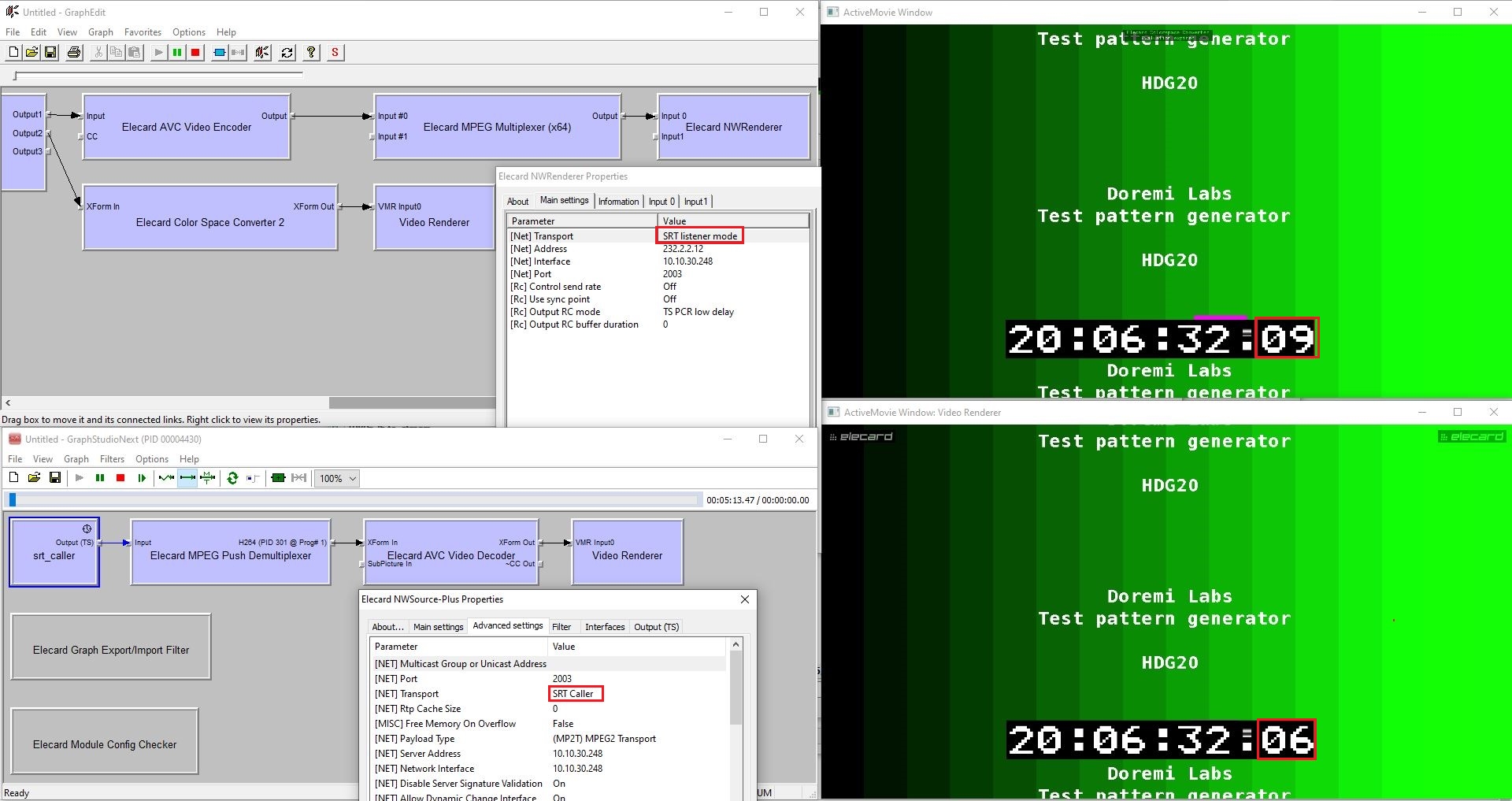

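The developers claim that the minimum latency achievable with SRT is 120 ms, with a minimal buffer, for transmission over short distances in closed networks. The total latency recommended for stable broadcasting is 3–4 RTT. In addition, SRT handles delivery over long distances (several thousand kilometers) and at high bitrates (10 Mbps or higher) better than its competitor RTMP.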
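The 3–4 RTT rule of thumb is easy to turn into a small calculation. In the sketch below, the function name, the default multiplier of 4 and the use of 120 ms as a lower bound are our assumptions, and the 150 ms RTT is only an illustrative value for a transatlantic link.

```python
def recommended_latency_ms(rtt_ms: float, multiplier: float = 4.0,
                           floor_ms: float = 120.0) -> float:
    """Suggest a total latency budget as a multiple of the measured RTT,
    never going below the claimed minimum."""
    return max(floor_ms, rtt_ms * multiplier)


print(recommended_latency_ms(150))                  # 600.0 for an assumed 150 ms RTT
print(recommended_latency_ms(150, multiplier=3.0))  # 450.0 at the lower end of the range
print(recommended_latency_ms(10))                   # 120.0, the floor applies on a short link
```

The budget has to cover at least one loss report and one retransmission, which is why it scales with RTT rather than with bitrate.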

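Figure 7. SRT broadcast latency measurement in the laboratory

In the example above, the laboratory-measured latency of SRT broadcasting is 3 frames at 25 frames per second, that is, 40 ms × 3 = 120 ms. From this we can conclude that the ultra-low latency of about 0.1 seconds achievable with UDP broadcasting is also attainable with SRT. SRT's scalability is not on the same level as that of HLS or DASH/CMAF, but SRT is well supported by CDNs and restreamers, and it also supports broadcasting directly to end clients through a media server in listener mode.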

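In 2017, Haivision opened the source code of the SRT libraries and created the SRT Alliance, which comprises more than 350 members.

Summary

To summarize, here is a comparison table of the protocols: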

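| Feature | RTMP | WebRTC | LL CMAF | LL HLS | SRT |
| --- | --- | --- | --- | --- | --- |
| Latency | ≥ 2 s | < 1 s | ≥ 2.5 s | ≥ 2.5 s | ≥ 120 ms |
| Throughput | Medium | Medium | High | High | High |
| Scalability | Low [1] | Low | High | High | Medium |
| Cross-platform and vendor support | Low [2] | High [3] | High | High | High |
| Adaptive bitrate | No | Yes | Yes | Yes | No |
| Encryption support | Yes | Yes | Yes | Yes | Yes |
| Firewall and NAT traversal | Low | Medium | High | High | Medium |
| Redundancy | Medium | Low | Medium | Medium | Low |
| Multiple codec support | No | Yes | Yes | Yes | Yes |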

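[1] Not supported by CDNs for delivery to end users. Supported for streaming content up to the last mile, for example to a CDN or a restreamer.

[2] Not supported in browsers.

[3] Not supported in Safari.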

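Today, everything that is open-source and well documented gains popularity quickly. It is fair to assume that formats such as WebRTC and SRT have a long-term future in their respective fields of application. In terms of minimum latency, these protocols already surpass adaptive broadcasting over HTTP, while maintaining reliable delivery and low redundancy and supporting encryption (AES in SRT and DTLS/SRTP in WebRTC). In addition, SRT's "little brother" (in terms of the protocol's age, not its features and capabilities), the RIST protocol, has recently been gaining popularity, but that is a topic for a separate review. Meanwhile, RTMP is being actively pushed out of the market by its newer competitors, and because of the lack of native browser support it is unlikely to be widely used in the near future.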

A free demo of the Elecard CodecWorks live encoder, which supports the RTMP, HLS and SRT protocols, is available.

Author

Vitaly Suturikhin

Head of Integration and Technical Support Department, Elecard

June 4, 2020