Transcoding video is a very resource-intensive task. Running it on the CPU can be fairly expensive, especially with the growth of OTT broadcasting, where each channel is delivered in multiple profiles. GPUs can be used to save resources. This article discusses the main advantages and drawbacks of GPU-based solutions, using Intel’s QuickSync and Nvidia’s NVENC as examples.

It is interesting to note that while technically being competitors in the field of video coding, both companies have been growing in parallel and even collaborating in the production of new chips.

First, we have to decide what graphics hardware we are going to compare. We are only going to consider stable solutions that are capable of operating 24/7—this is a must in TV broadcasting.

With Intel, it is simple: we will take one of the latest-generation Xeon Coffee Lake CPUs, the Intel® Xeon® E-2246G, which has an integrated Intel® UHD Graphics P630 GPU.

With Nvidia, things are a little more complicated. Our pick is the Quadro RTX 4000 (8 GB), which is a server counterpart of the GeForce RTX 2070 Super (8 GB) but, unlike the GeForce card, does not officially limit concurrent processing to 3 streams. It is indeed possible to remove this limitation by installing an unofficial patch available on the internet, but, as we noted earlier, we will only consider stable, proven, official solutions. We have excluded older video cards because they are bound to lag in HEVC coding performance due to their missing B-frame coding capability.

Let us now specify the platforms with the chosen graphics solutions.

| Component | Intel platform | Nvidia platform |
| --- | --- | --- |
| CPU | Intel® Xeon® E-2246G | Intel® Xeon® E-2224 |
| Video card | Integrated graphics Intel® UHD Graphics P630 | Quadro RTX 4000 (8 GB) |
| RAM | 2 × 8 GB (dual-channel support is important) | 16 GB |
| HDD or SSD | 128+ GB | 128+ GB |
| Supplier price | around $1500 | around $3000 |

Maximum possible number of transcoded channels

Now let’s have a look at the numbers. These are results of a load test for the maximum possible number of transcoded channels (in the "fastest" mode).

| Platform | Transcoding AVC FHD 10 Mbps to AVC FHD 8 Mbps @ 30 fps | Transcoding AVC FHD 10 Mbps to HEVC FHD 5 Mbps @ 30 fps |
| --- | --- | --- |
| Intel | Up to 12 channels | Up to 13 channels |
| Nvidia | Up to 24 channels | Up to 14 channels |

In this comparison, Nvidia has twice the performance of Intel at AVC transcoding and is only marginally ahead at HEVC transcoding (14 channels vs. 13).

Price per channel including server costs

Now that we know the maximum possible number of FHD (1920x1080) channels per server configured with integrated Intel graphics or the Nvidia video card, we can calculate the price per FHD channel from the known server prices.

| Platform | Price per transcoded AVC channel | Price per transcoded HEVC channel |
| --- | --- | --- |
| Intel | $125 | $115 |
| Nvidia | $125 | $214 |
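The per-channel prices above follow directly from the server prices and the channel counts measured in the load test. A minimal sketch of that arithmetic is given below; the names `SERVER_PRICE_USD`, `MAX_CHANNELS`, `price_per_channel`, and `price_table` are illustrative and not part of any vendor tool.

```python
# Approximate server prices and maximum channel counts, taken from the tables above.
SERVER_PRICE_USD = {"Intel": 1500, "Nvidia": 3000}
MAX_CHANNELS = {
    "Intel": {"AVC": 12, "HEVC": 13},
    "Nvidia": {"AVC": 24, "HEVC": 14},
}


def price_per_channel(platform: str, codec: str) -> float:
    """Server price divided by the number of channels one server can transcode."""
    return SERVER_PRICE_USD[platform] / MAX_CHANNELS[platform][codec]


def price_table() -> dict:
    """Price per channel for every platform and codec, rounded to whole dollars."""
    return {
        platform: {codec: round(price_per_channel(platform, codec)) for codec in codecs}
        for platform, codecs in MAX_CHANNELS.items()
    }


for platform, prices in price_table().items():
    print(platform, prices)
```

Changing a server price or a channel count in the two dictionaries is enough to redo the comparison for a different supplier quote or a different load-test result.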

It turns out that, for AVC, there is no difference in price per channel: Nvidia’s 2x performance advantage is completely offset by the higher price of its platform. For HEVC, Nvidia is much more expensive per channel when aiming for the maximum number of channels, that is, when using the fastest encoding algorithms and sacrificing quality.

Leaving the calculations aside for a moment, we will now address the issue of quality, as it is much fairer to compare the platforms at equally acceptable quality than at the quality obtained in fast modes.

Resultant quality of output vs. input stream

Another notable factor to consider is video compression quality—after all, there is no point in having many channels if their quality is not acceptable.

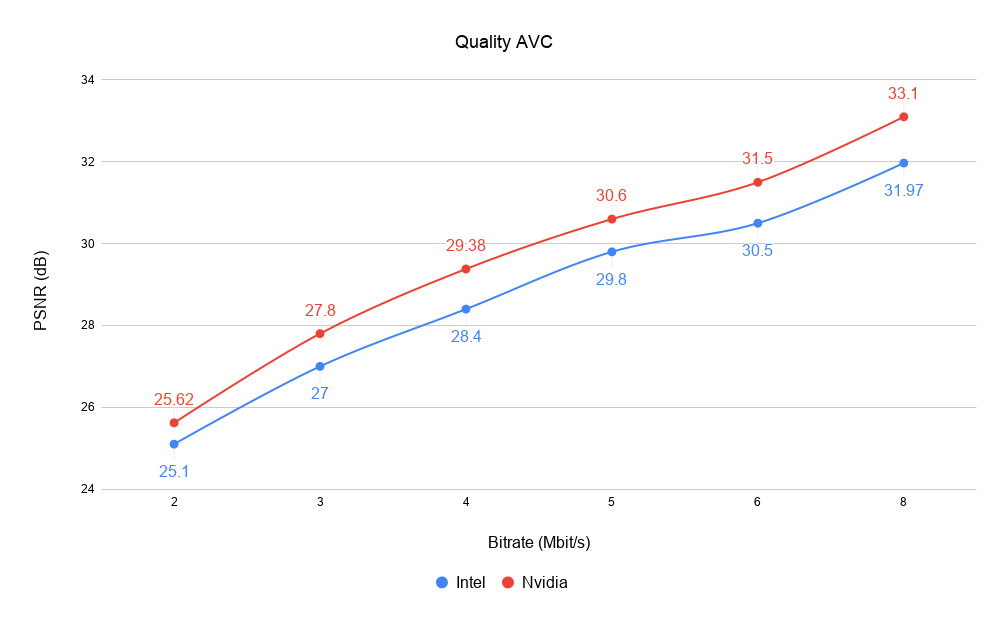

A quality comparison graph based on the PSNR metric is presented below, with Intel AVC vs. the input stream shown in blue and Nvidia AVC vs. the input stream in red.

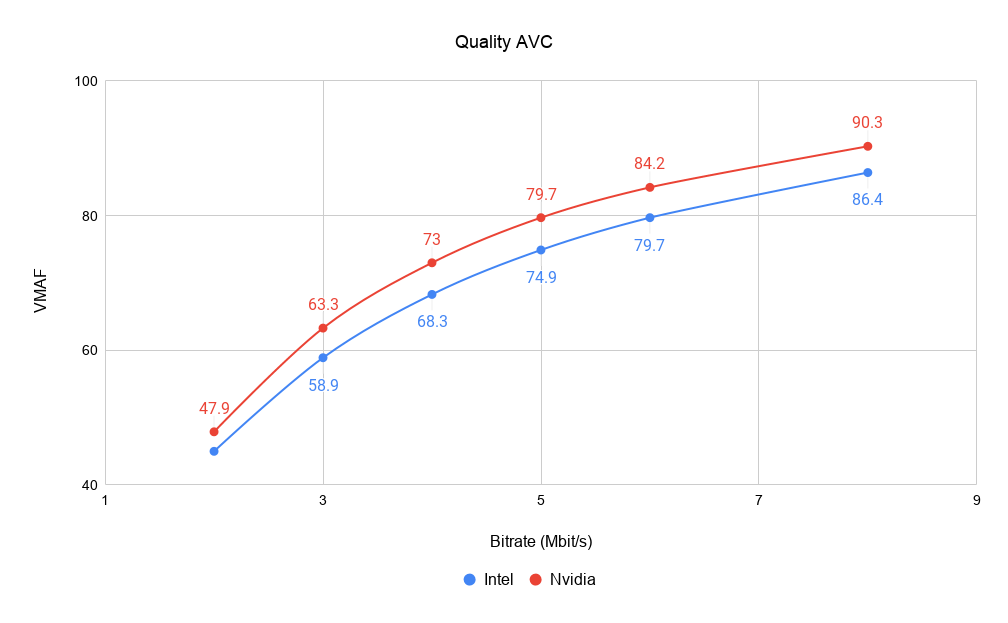

We can see from the graph that the resultant stream quality is virtually identical for both solutions. Let us now compare the streams using the VMAF metric.

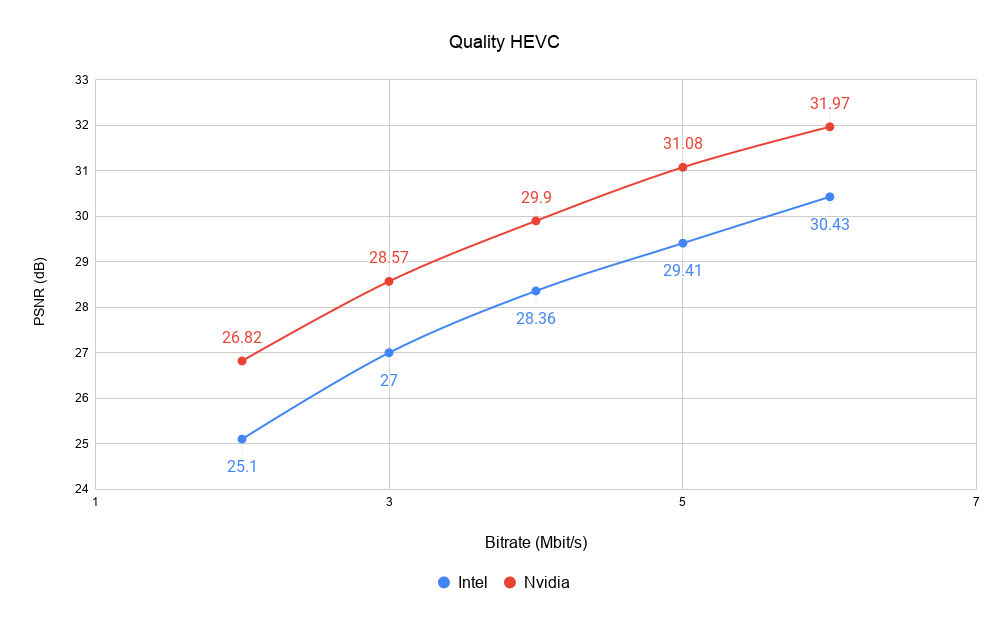

The next graph compares the quality of the Intel and Nvidia HEVC streams with that of the input stream, shown in blue and red, respectively.

This graph tells us that our comparison was not entirely valid, because the maximum possible number of transcoded channels for Nvidia is 14 at almost 2 dB higher quality than that of the 13 Intel channels.

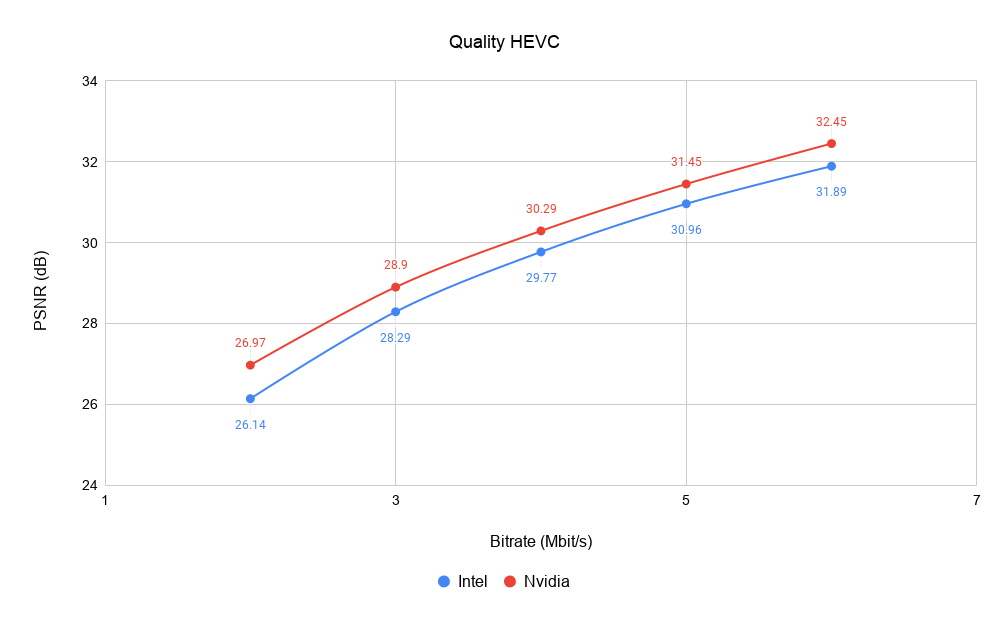
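To put the roughly 2 dB PSNR gap mentioned above in perspective, recall that PSNR is a logarithmic function of the mean squared error (MSE) between the reference and the encoded picture. The sketch below is a simplified illustration, not the measurement tool used for the graphs: it assumes 8-bit samples passed as flat sequences, and the function names `psnr` and `mse_ratio` are hypothetical.

```python
import math


def psnr(reference, distorted, peak=255):
    """PSNR in dB between two equal-length sequences of samples (8-bit by default)."""
    if len(reference) != len(distorted):
        raise ValueError("sequences must have the same length")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(peak ** 2 / mse)


def mse_ratio(psnr_gap_db):
    """How many times larger the MSE is for a stream scoring psnr_gap_db lower."""
    return 10 ** (psnr_gap_db / 10)


print(f"A 2 dB PSNR gap corresponds to an MSE ratio of {mse_ratio(2.0):.2f}")
```

Under these assumptions, a stream that scores 2 dB lower carries close to 1.6 times the mean squared error, which is why the two fast-mode HEVC results cannot be treated as equal in quality.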

Therefore, we carried out several more measurements at the maximum possible quality, on Nvidia and on Intel in GAcc mode. GAcc stands for "GPU Accelerated": the encoding is performed with CPU support instead of relying solely on the GPU. We obtained the following results for Intel HEVC GAcc and Nvidia HEVC vs. the input stream, shown in blue and red, respectively:

The encoding quality was virtually identical, but the performance of both systems dropped to a fraction of the previous levels. This time, Nvidia could only transcode four FHD HEVC channels and Intel just two. Based on the new data, we can now recalculate the price per channel:

- $1500/2 = $750 per transcoded HEVC channel for Intel;

- $3000/4 = $750 per transcoded HEVC channel for Nvidia.

In other words, just as with AVC, the cost per channel is the same on both platforms.

Power consumption at equal load

Let us consider yet another important factor in operating a production system: the platform’s power consumption. From our tests at maximum platform load we found that:

- The Nvidia platform consumes about 200 W.

- The Intel platform consumes about 75 W, but since it has half as many channels, we will multiply that value by 2 for a total of about 150 W.

As it turns out, the Nvidia platform consumes 50 W more while doing the same job.
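The same comparison can be expressed per channel. The sketch below assumes the AVC channel counts from the load test (12 for Intel, 24 for Nvidia), since those match the "half as many channels" remark; the names `PLATFORM_POWER_W`, `AVC_CHANNELS`, `watts_per_channel`, and `power_for_channels` are illustrative.

```python
import math

# Measured platform power at maximum load (from the list above) and the
# AVC channel counts from the load test (assumed to be the relevant load).
PLATFORM_POWER_W = {"Intel": 75, "Nvidia": 200}
AVC_CHANNELS = {"Intel": 12, "Nvidia": 24}


def watts_per_channel(platform: str) -> float:
    """Power drawn by one fully loaded server, divided by its channel count."""
    return PLATFORM_POWER_W[platform] / AVC_CHANNELS[platform]


def power_for_channels(platform: str, channels: int) -> int:
    """Total power of the whole servers needed to transcode `channels` channels."""
    servers = math.ceil(channels / AVC_CHANNELS[platform])
    return servers * PLATFORM_POWER_W[platform]


for name in PLATFORM_POWER_W:
    print(name, round(watts_per_channel(name), 2), "W per channel,",
          power_for_channels(name, 24), "W for 24 channels")
```

Note that `power_for_channels` counts whole servers at full-load power, so it overestimates consumption for a partially loaded last server.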

Server rack space occupied

With large numbers of channels to transcode, the issue of physical server space often arises.

For Intel, special blade server platforms exist where one 3U server can accommodate 8 to 14 blades (full-fledged servers in a different form factor). One 3U platform is capable of transcoding up to 168 FHD AVC channels. With a regular rack server instead of a blade server, this number of channels would require a rack height of 14U.

The situation with Nvidia is more complicated in this respect because the video cards themselves take up additional space in the platform and are often bulky. With 1 video card per 1U server, 168 FHD AVC channels would consume 7U of rack space. It is possible to save on the cost per platform by installing several video cards on one platform, but this is unlikely to bring space savings, as accommodating 2 or 3 video cards would necessitate a platform of 3U or even 4U.
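The rack-space figures above can be reproduced with a short calculation. This is a sketch under stated assumptions: each Intel blade or 1U server is taken to match the tested platform (12 FHD AVC channels), each 1U Nvidia server carries one card (24 channels), and the blade chassis is fully populated with 14 blades. The function names `rack_units` and `channels_per_unit` are hypothetical.

```python
import math


def rack_units(channels: int, channels_per_server: int, units_per_server: int = 1) -> int:
    """Rack height (in U) of the whole servers needed for `channels` channels."""
    return math.ceil(channels / channels_per_server) * units_per_server


def channels_per_unit(channels: int, units: int) -> float:
    """Channel density: transcoded channels per rack unit."""
    return channels / units


TARGET = 168  # FHD AVC channels, as in the text

blade_chassis_channels = 14 * 12         # 14 blades x 12 channels in one 3U chassis
intel_1u = rack_units(TARGET, 12)        # regular 1U Intel servers
nvidia_1u = rack_units(TARGET, 24)       # 1U servers with one Quadro card each

print("Intel blade chassis:", blade_chassis_channels, "channels in 3U")
print("Intel 1U servers:", intel_1u, "U")
print("Nvidia 1U servers:", nvidia_1u, "U")
```

Dividing the channel count by the occupied height gives the density: 56 channels per U for the blade chassis, 24 for 1U Nvidia servers, and 12 for 1U Intel servers.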

Specialized applications

In addition to video transcoding, there are other specialized tasks, such as decoding video for visual monitoring purposes or encoding the output from an SDI/NDI capture card. These applications are also much better served by an Intel-based solution, because they are often smaller in scale and hence cannot fully utilize the resources of the Nvidia platform. Even if you need to encode SDI signals, it will usually be a handful of channels, and it is a rare project that requires encoding up to 24 SDI signals. Moreover, it is going to be pretty hard to accommodate a PCI SDI capture card and a PCI video card on a 1U platform: you will have to choose a platform with a different rack height or find one that has enough space for two cards, which is a rarity.

Decoding is less resource-intensive than transcoding, and thus, in theory, you could use Nvidia to monitor more than 24 FHD AVC channels visually. In practice, however, the limit is 8 channels or so, because it is impossible to send more decoded (uncompressed) video data via the PCI bus. With Intel, this problem does not arise, as the GPU is integrated with the CPU.

In all fairness, it is worth noting here that Nvidia is more attractive for transcoding UHD content, since you can implement multi-profile transcoding on a single video card. Intel, on the other hand, is not capable of transcoding multi-profile UHD content on one GPU core and, therefore, requires a mechanism for distributing the stream between servers. This solution is known as distributed transcoding.

Conclusions

Based on this comparison, we can identify the main advantages of both solutions.

| Intel advantages |

|

| Nvidia advantages |

|

Having compared the two graphics solutions on all aspects of interest, we can now conclude that they are close competitors, and it is difficult to identify a clear winner.

The key consideration when choosing transcoding hardware may well be the software vendor that builds on it: much depends on the vendor’s specific implementation of the platform tools offered by Intel or Nvidia and on the advanced features of the entire hardware and software solution. Other aspects include the solution price, software features, warranty, history of successful projects, ease of customization, SLA, and the competence of the maintenance engineers. For example, instead of Nvidia’s own software implementation of its platform tools, an Nvidia-based solution often includes an evaluation sample or an open-source implementation as part of the software package. While there is nothing wrong with an open-source project as such, it has its disadvantages, such as the inability to have it customized with additional features or to get a bug fixed, because these implementations lack technical support and an SLA.

Request a free demo of the Elecard CodecWorks live encoder, based on Intel QuickSync and NVIDIA GPU acceleration.

Author

Vadim Blinov

Product Manager of Elecard CodecWorks since 2016. He has 4 years of experience in the video encoding sphere.

November 19, 2020